The 'Angel of the North' Testing Anti-Pattern

The Angel of the North in Tyne and Wear, England - photo by Seth M.

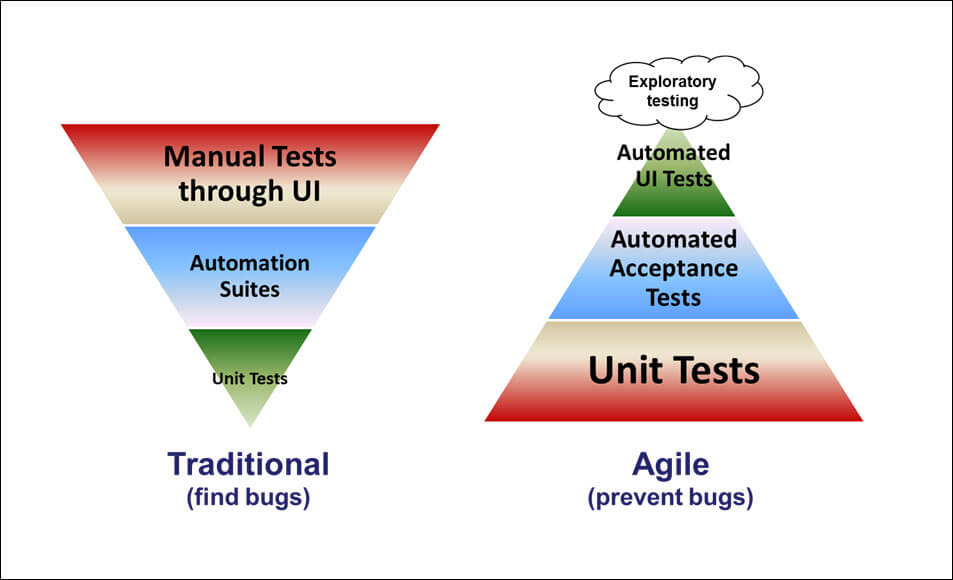

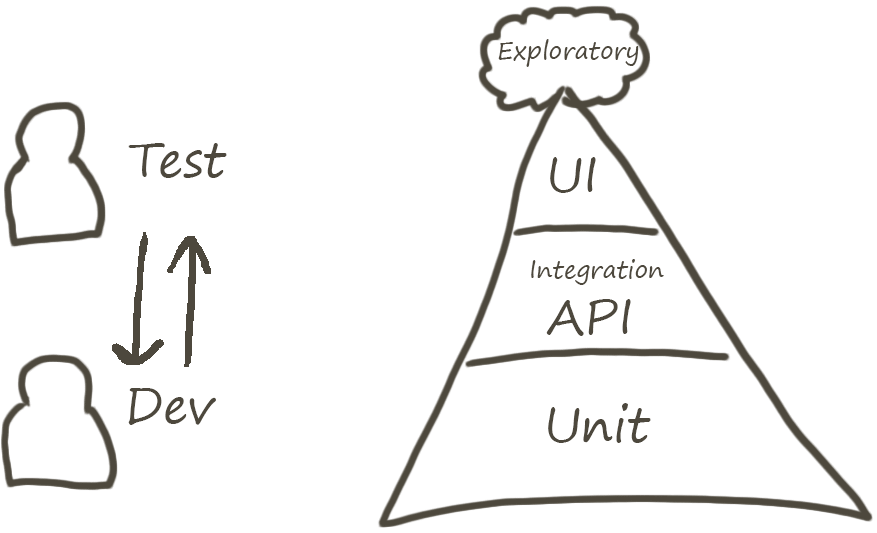

The following diagram is from an excellent post about the agile testing pyramid.

I found the discussion of two different philosophies, “find bugs” vs. “prevent bugs”, especially insightful. Running most tests before code is committed prevents most bugs, rather than finding them after they have been committed and handed to a test team (or users!).

However, some of the projects I’ve seen could be modelled in a different way.

Firstly, here are my interpretations of the two most common versions of the test pyramid.

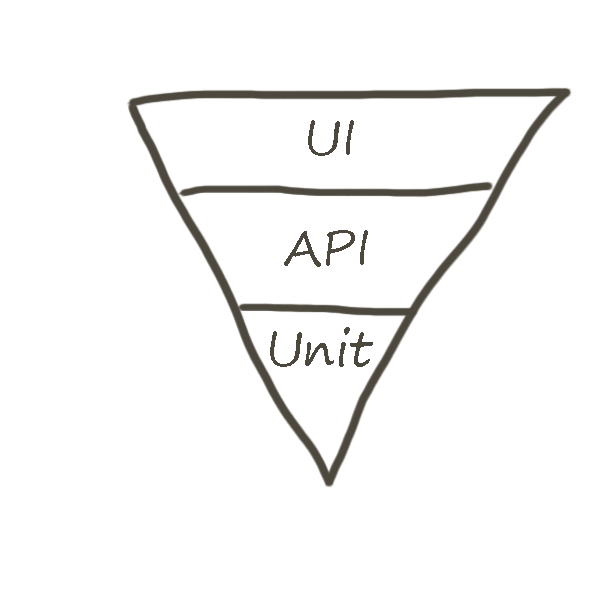

The “Traditional” Testing Pyramid

This is how I view the “Traditional” (inverted) Pyramid.

It shows very few unit-level tests, slightly more integration-level tests and relatively more UI tests. The UI tests are written as steps in a test script document created from “requirements” - this often creates waste if the scripts need to repeatedly change after being executed.

This approach can do a good job of catching defects before you ship, but it seems wasteful. Effort is predominantly spent catching and fixing bugs rather than preventing bugs from being checked in.

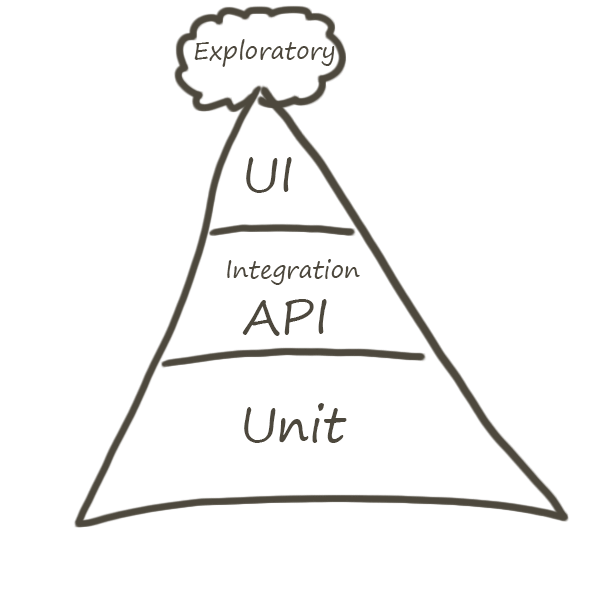

The Agile Testing Pyramid

This feels like the right way™ to do software testing, at least if your goals are to be agile or to maintain a steady long-term pace of development.

I view the layers in the agile test pyramid as follows: automated unit tests, integration (API) tests and UI tests, along with some manual exploratory testing.

Layer Variation

Other versions I’ve seen sub-divide the API layer and some, for example Roger’s above, label the middle layer “acceptance tests” or “automated suites” (in the traditional version).

Mike Cohn calls it the service layer, but he doesn’t just mean web services. He also considers the service layer vital but easy to forget.
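As a rough illustration of a middle-layer test that involves no web service at all, here is a minimal Python sketch. `BasketService` and its methods are hypothetical names invented for this example; the point is only that the test drives the business logic directly, beneath the UI.

```python
import unittest


class BasketService:
    """Hypothetical service-layer class, invented for this example."""

    def __init__(self):
        # Maps SKU -> (unit price in pence, quantity).
        self._items = {}

    def add_item(self, sku, unit_price_pence, quantity=1):
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        price, current = self._items.get(sku, (unit_price_pence, 0))
        self._items[sku] = (price, current + quantity)

    def total_pence(self):
        return sum(price * qty for price, qty in self._items.values())


class BasketServiceTest(unittest.TestCase):
    """Exercises the service directly: no browser, no HTTP, no UI script."""

    def test_total_sums_all_items(self):
        basket = BasketService()
        basket.add_item("tea", 250, quantity=2)
        basket.add_item("milk", 99)
        self.assertEqual(basket.total_pence(), 599)

    def test_rejects_zero_quantity(self):
        basket = BasketService()
        with self.assertRaises(ValueError):
            basket.add_item("tea", 250, quantity=0)
```

Saved as a module, these tests can be run with `python -m unittest`. Tests at this level tend to be much faster and less brittle than the equivalent steps driven through a UI.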

Anti-Pattern

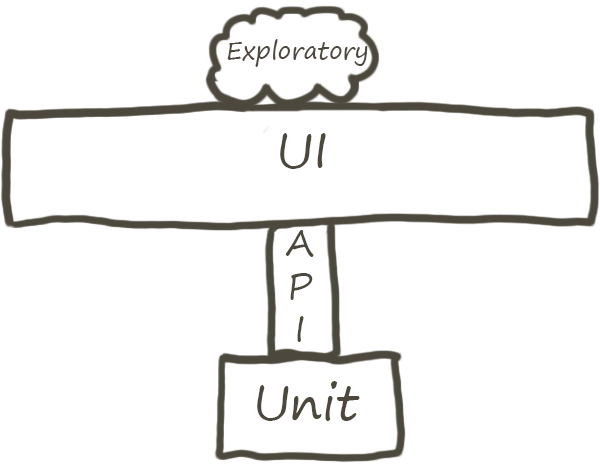

As my variation isn’t a recommended pattern I decided to dub it an “anti-pattern”.

The Testing “Angel of the North” Anti-Pattern

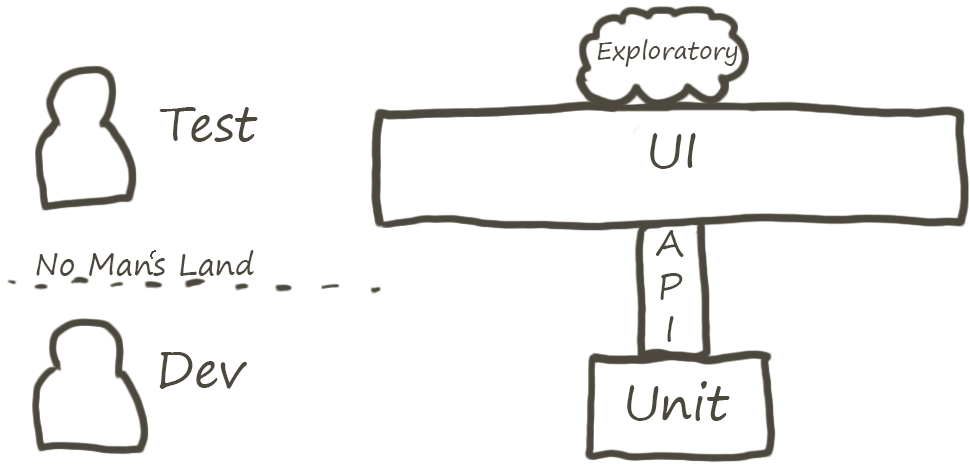

Here is my attempt to model some of the projects that I’ve seen.

It’s a hybrid of the two pyramids, as you have:

- slightly more unit tests,

- (almost) no API tests,

- a similar number of UI tests (with varying degrees of automation),

- some exploratory testing.

It’s an example of adopting some of the techniques but not the philosophy or principles of agile.
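To make the three shapes concrete, here is a minimal Python sketch that labels a test suite by comparing the number of tests at each layer. The names `LayerCounts` and `classify_shape`, and the simple comparison rules, are illustrative assumptions rather than any standard definition; a real project would probably want thresholds instead of strict comparisons.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerCounts:
    """Number of tests at each layer (illustrative model only)."""
    unit: int
    api: int
    ui: int


def classify_shape(counts: LayerCounts) -> str:
    """Give a rough label to the distribution of tests across layers."""
    if counts.unit > counts.api > counts.ui:
        return "agile pyramid"
    if counts.unit < counts.api < counts.ui:
        return "traditional (inverted) pyramid"
    # Assumption: the Angel shape is a thin middle, with the API layer
    # smaller than both the unit layer and the UI layer.
    if counts.api < counts.unit and counts.api < counts.ui:
        return "angel of the north"
    return "other"


print(classify_shape(LayerCounts(unit=60, api=2, ui=300)))
```

With the made-up counts above (some unit tests, almost no API tests, many UI tests) the sketch reports the Angel shape.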

Conway’s Law/Team Structure

Thoughtworks introduced the Cupcake Anti-Pattern, which was a version of the agile test pyramid but with more focus on the team structure.

The “Angel approach” is also a symptom of the team structure, largely of the division between dev and test.

The test team are responsible for “testing”: they write and run UI-level tests (and might automate some proportion of these). The developers are typically pressured into developing code faster, so they think, or are told, that they have little time to test or refactor. No-one takes responsibility for API-level testing: the developers don’t see it adding enough value, and the testers are often uncomfortable with the technical nature of these tests.

Problems With The Angel Approach

The main issue I see is that defects are found late and take a long time to fix (and then re-test). Ultimately this leads to much longer iterations and less frequent releases, so more risk builds up between releases, which people try to mitigate with even more checks, gates and so on. The delay increases further if the team is using some flavour of Scrummerfall or WaterScrumFall and if the tests are not reliably automated. One technique for dealing with this was to dedicate a whole sprint, immediately before each release, to bug finding, fixing and re-testing.

Also, developers are afraid to refactor; without a quick way to feel confident your refactor hasn’t broken anything (usually achieved with a good set of unit-level tests) it simply won’t happen because it hurts too much when, not if, a mistake is made.

Responsibilities are not shared very widely, meaning people (or sub-teams) can be single points of failure. This also means that capacity is reduced, and creating and maintaining automation is one of the first things to get dropped, especially as UI automation costs more in the first place due to the amount of change and its (relatively) slow performance.

Finally, test coverage will be difficult to ascertain, and certain areas will not be automated, either because they would be too difficult to automate via the UI or because the code wasn’t designed to be tested and so is too hard to test at the unit level (or both).

Automation

I think the problems happen regardless of how much UI automation you have. (I assume all unit and API-level tests are automated!) In my experience the feedback cycle is too slow if the following can’t happen within an hour of a commit:

- compile,

- run unit tests,

- deploy the project,

- run API and UI tests and check results.
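As a rough way to check that rule of thumb, here is a minimal Python sketch that times each stage and compares the total against a one-hour budget. The stage functions are empty placeholders and the names `run_pipeline` and `within_budget` are assumptions for illustration; a real build server would provide its own timings.

```python
import time

FEEDBACK_BUDGET_SECONDS = 60 * 60  # "within an hour of a commit"


def run_pipeline(stages, clock=time.monotonic):
    """Run each (name, callable) stage in order and record its duration.

    An exception raised by a stage stops the run, much as a failed
    build step would.
    """
    timings = {}
    for name, stage in stages:
        started = clock()
        stage()
        timings[name] = clock() - started
    return timings


def within_budget(timings, budget=FEEDBACK_BUDGET_SECONDS):
    """True if the whole feedback cycle fits inside the budget."""
    return sum(timings.values()) <= budget


# Placeholder stages: swap in real build, test and deploy commands.
stages = [
    ("compile", lambda: None),
    ("run unit tests", lambda: None),
    ("deploy the project", lambda: None),
    ("run API and UI tests", lambda: None),
]

timings = run_pipeline(stages)
for name, seconds in timings.items():
    print(f"{name}: {seconds:.3f}s")
print("fast enough" if within_budget(timings) else "feedback too slow")
```

Recording the duration per stage, rather than only the total, makes it easier to see which layer is eating the budget.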

Certainly context is always important to consider and, as with most things to do with complex systems of people and software, this isn’t a universal statement.

The quality of the architecture, the readability of the code, and the quality and coverage of the unit-level testing seem to have more of a bearing on how many bugs get through to the test team and how quickly they are fixed.

Moving From Angel To Agile

Presuming you have a reason to move (i.e. to reduce the risk of the above problems) - where do you start? You probably need to start with the people: the project team, along with internal and external stakeholders.

If you can’t spend an unknown amount of time changing your people and your test (and maybe even code) architecture then “big bang” obviously isn’t a good idea.

As you can see, a lot of these problems are highly interrelated, so it becomes difficult to know where to start, or whether to start at all. However, it’s also clear the team will slow down (in the short term) while any changes are being made that are not directly related to new features.

I don’t have the answer, because it entirely depends upon your context, but I will discuss some of my thoughts in a future post around moving from Angel to Agile.

Further Reading

- The Forgotten Layer

- Agile Testing the Agile Test Automation Pyramid

- Evolution of the Testing Pyramid